Google’s new AI-powered Search Generative Experience

Google is close to making some of the biggest changes to its search results in decades, integrating what it’s calling an AI snapshot that summarises an answer to a user’s query.

At first glance it looks a bit like a featured snippet on steroids, but the truth is it’s much more than that. Where a featured snippet pulls out a sentence or two from an existing page, the AI snapshot generates a brand new answer – consolidating and summarising multiple sources from around the web.

Right now this is experimental. The Search Generative Experience (SGE), as Google is calling it, will only be rolled out to those on a waitlist (you can sign up here, though it’s restricted to the US for now, unfortunately). But it’s clear that this will eventually become part of Google’s main search offering.

This is the biggest, most interesting and most exciting change to Google’s search results in decades.

Other sites have covered the news itself in a lot more detail. The coverage from The Verge is particularly good, as is this article on Search Engine Land – and it’s worth seeing it in action in the keynote at Google I/O.

What we know

The demo of Google’s new AI-powered SGE gave us a clear look at something we’d only speculated on before, and quite a few of our questions were answered. By far the biggest question was: would Google cite its sources?

The AI snapshot links to sites

SEOs knew that something like this was coming, and the fear was always that Google (unlike Bing) wouldn’t cite its sources. The risk was that Google would take all of your content, use it to train its AI, and then not give you credit (in the form of search traffic).

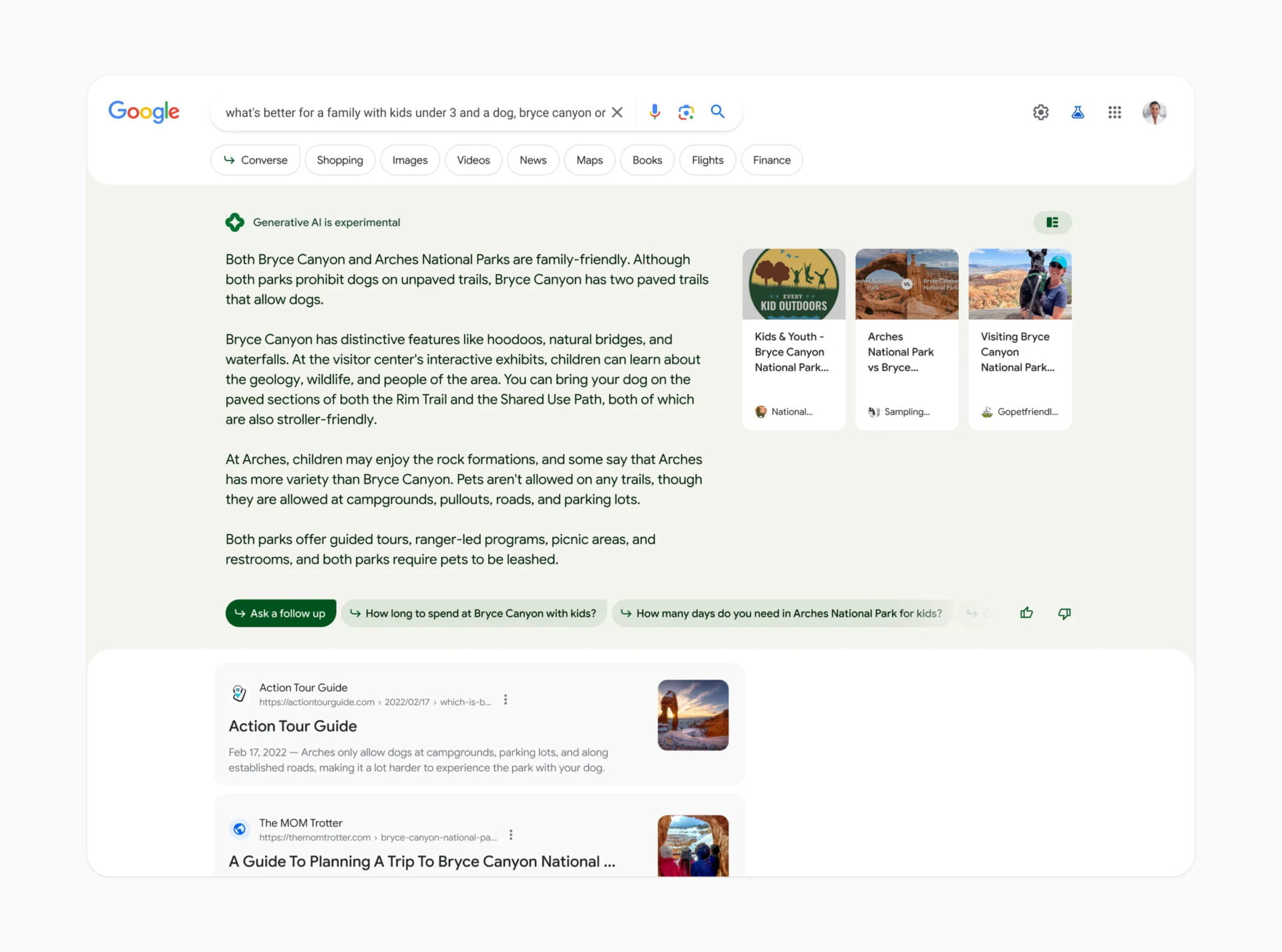

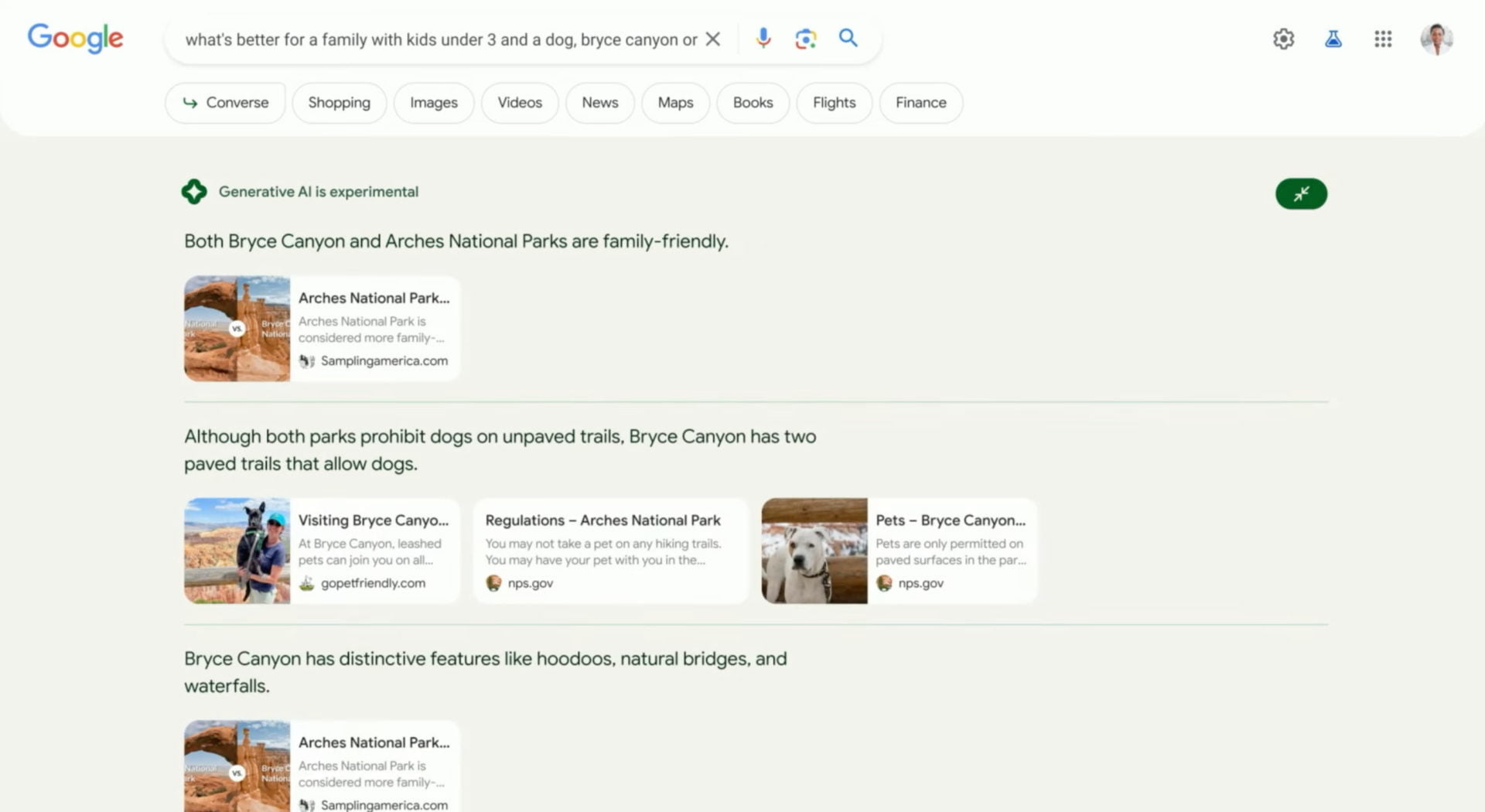

Fortunately for everyone, Google is very clearly and very prominently showing organic links to the sites that it’s using to build the answer.

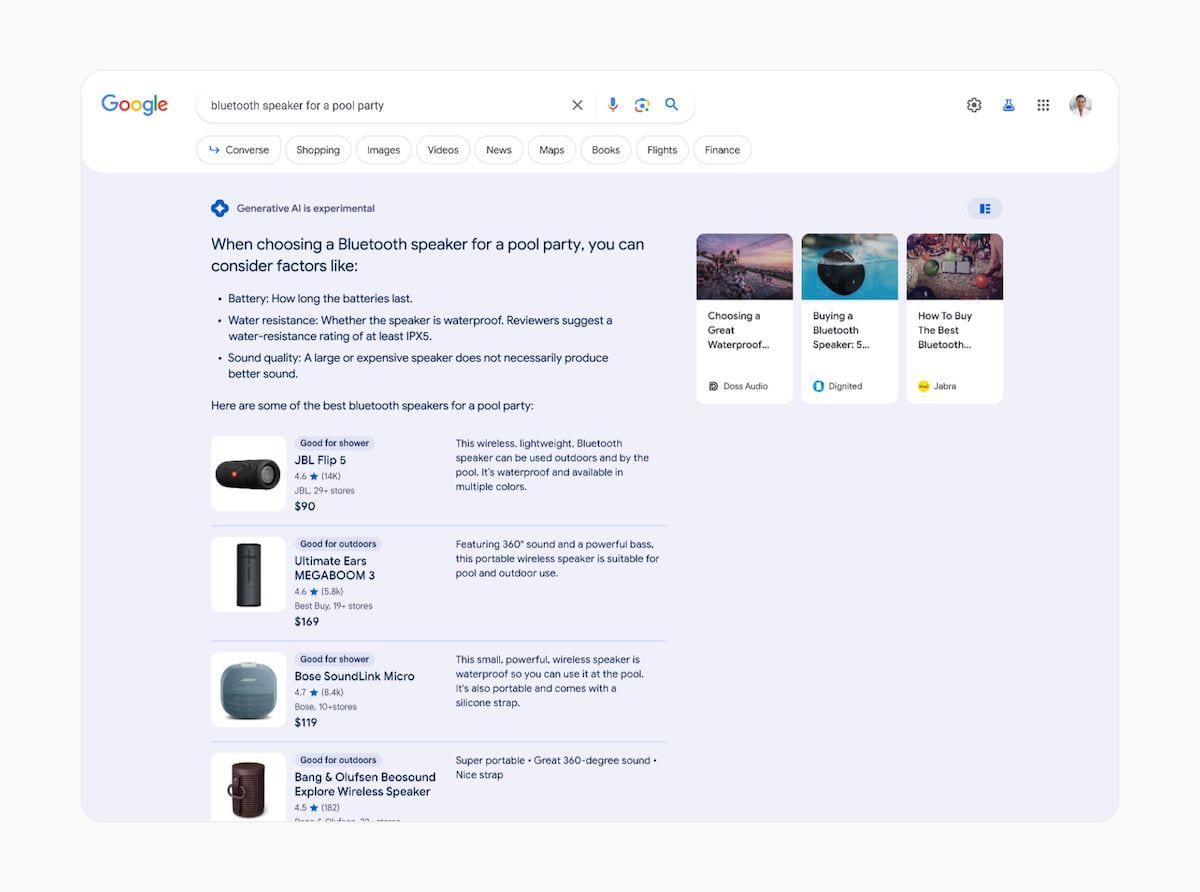

The AI snapshot clearly shows a selection of relevant results. And as great as it is to see organic results prominently shown above the fold, it’s also interesting to see that users can drill down to see the other sources that Google used to build the summary.

These are all clear, visible ways to drive organic traffic to publisher sites.

It’s limited to certain queries

Google has said that – for now – it’s not rolling it out to anything finance- or medical-related (basically anything that’s classified as Your Money or Your Life).

Presumably Google doesn’t want to risk misleading people or accidentally providing poor-quality information on sensitive topics.

It’s integrated into results – not a separate section

It’s not a separate chatbot that’s been shoved into a different tab. It’s a large visual block, above the fold, that appears above the regular results.

What we don’t yet know

But while we’ve seen a glimpse of the future, there are still a lot of things that we don’t yet know.

What effect will it have on search queries?

Google’s keynote made it seem like they expected that users would, over time, adapt and start asking much longer questions. The example they used was:

what’s better for a family with kids under 3 and a dog, bryce canyon or arches

That’s a long query. It’s a longer query than basically any we see appear in Search Console, and certainly longer than any real query we actively try to optimise our pages for.
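To put a number on "long", here is a minimal sketch that counts the words in the keynote query and compares it with a handful of short-tail queries. The sample list is made up for illustration (it is not real Search Console data), and `word_count` is just a helper name chosen here; swap in queries from your own Search Console export to run the same comparison.

```python
from statistics import median


def word_count(query: str) -> int:
    """Count whitespace-separated words in a search query."""
    return len(query.split())


# The example query from Google's keynote.
keynote_query = (
    "what's better for a family with kids under 3 and a dog, "
    "bryce canyon or arches"
)

# Hypothetical sample of queries, for illustration only.
# Replace with queries from your own Search Console export.
sample_queries = [
    "car insurance",
    "credit cards",
    "mortgages",
    "best family national parks",
]

sample_lengths = [word_count(q) for q in sample_queries]

print("Words in keynote query:", word_count(keynote_query))
print("Median words in sample:", median(sample_lengths))
print("Longest query in sample:", max(sample_lengths))
```

Counting words is a crude measure, but it is enough to see how far a conversational query like this sits from the queries most pages are optimised for today.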

Will users actually adapt and start writing long, complex queries like this? If so, we might end up finding much more variance in the types of queries that drive traffic to our pages.

This might not be such a bad thing. At the moment, there are entire industries that focus heavily on very short-tail queries. Car insurance. Credit cards. Mortgages. These are all insanely competitive, with the sites at the top getting most of the traffic, and working in niches like this can feel a little feast or famine.

A change in user behaviour with many varied search queries could result in a flattening of the short tail, and a longer long tail. There might be less traffic to the short tail queries, with more traffic going to the longer tail.

Will it lead to a drop in search traffic?

There’s no getting around the fact that the AI snapshot is massive. It takes up a lot of room, which means the standard 10 blue links get pushed even further down. If you’re in the 10 blue links for the query, there’s a good chance that your click-through rate will drop, resulting in less traffic for that search.

But it’s also not as simple as that.

If user behaviour does change and more searches are for long-tail queries, then it’s possible that search traffic gets shared across a more diverse, broad set of sites.

And we don’t know how much traffic you could get when you appear in the AI snapshot. We don’t know what that click-through rate might look like – but it’s entirely feasible that being featured there can drive a substantial amount of traffic.

How do you increase the chance that you’ll appear in the AI snapshot?

The AI snapshot is a huge change, but it’s unlikely that Google would throw out all of the signals and systems that it’s used to rank content on the web for decades. Content, links, user experience and the technical build of pages are all still extremely likely to be hugely important things to focus on.

But it is a change. Search Engine Land has a particularly interesting quote from Liz Reid, VP of Search at Google:

This new search experience “places even more emphasis on producing informative responses that are corroborated by reliable sources” – Source

That could mean that the AI snapshot uses links from trusted sites. But it could also mean that the snapshot doesn’t need to lean quite as heavily on links – maybe it’s better at seeing whether those same trusted sites simply mention your brand positively.

It’s possible that – to win in the AI snapshot – you’ll need publishers to corroborate the things you’re saying and recommend your products. And the way to do that is the same easily-said but hugely difficult task: build great products and do an insanely great job of promoting them.

How will we measure it?

Right now, we don’t know if we’ll be able to tell which visitors came from a click on an AI-generated response vs a regular 10 blue links click. We’re not sure if we’ll be able to see clicks and impressions from the AI snapshot in Search Console (if you’re a Googler reading this – it would be amazing if we could).

But also – will users perform searches, see recommendations for your products in the AI snapshot, but not click through there and then? Will they then consider the options and buy the product in-store, or by visiting your site directly at a later date? And if so – can that sale ever be accurately attributed?

Will users like the change?

This is probably the biggest question for me.

My initial feeling on the AI snapshot is that it has the potential to be really positive for users. Google has picked up criticism in the last few years – a general feeling that search results just aren’t as good as they used to be. Too many ads. Too much spam. Too many out-of-date, inaccurate articles ranking well.

If the AI snapshots are accurate and up-to-date, do a good job of summarising a solid answer, and direct users to the absolute best possible resources to learn more, then I could easily see this being popular.

But again, it’s not as simple as that.

There’s the question of speed. The demos showed the initial 10 blue links loading near-instantly, but the AI snapshot taking several seconds to answer the query. Google knows better than anyone the impact that slow-loading search results can have on user behaviour.

In a study from 2009, Google found that even very minor delays to showing search results led to reduced satisfaction amongst users.

All other things being equal, more usage, as measured by number of searches, reflects more satisfied users. Our experiments demonstrate that slowing down the search results page by 100 to 400 milliseconds has a measurable impact on the number of searches per user of -0.2% to -0.6% (averaged over four or six weeks depending on the experiment)… While these numbers may seem small, a daily impact of 0.5% is of real consequence at the scale of Google web search – Source

And that was observable with delays of just 100ms – how big will the impact be when the slowdown is 20 times that?
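As a rough back-of-the-envelope sketch, here is what a naive straight-line extrapolation of the 2009 figures would suggest for a delay of 20 × 100ms (2 seconds). This rests on two big assumptions that the study does not make: that the 100ms delay pairs with the 0.2% drop and the 400ms delay with the 0.6% drop, and that the relationship stays linear far outside the tested range. Treat the output as illustrative only, not as a prediction.

```python
def extrapolate_drop(delay_ms: float) -> float:
    """Naive linear extrapolation of the drop in searches per user (in %).

    Assumption (not from the study): the quoted range endpoints pair up
    as 100 ms -> 0.2% and 400 ms -> 0.6%, and the trend is a straight line.
    """
    low_ms, low_drop = 100.0, 0.2
    high_ms, high_drop = 400.0, 0.6
    slope = (high_drop - low_drop) / (high_ms - low_ms)  # % per extra ms
    return low_drop + slope * (delay_ms - low_ms)


delay_ms = 20 * 100  # "20 times" a 100 ms delay, i.e. 2 seconds
estimate = extrapolate_drop(delay_ms)
print(f"{delay_ms} ms delay: roughly {estimate:.1f}% fewer searches per user")
```

Real users may well react differently to a delay they can see is producing something new, so the true effect could be smaller or larger than any straight line suggests.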

But perhaps the bigger question: will the results be accurate?

Google has published a 19-page overview of SGE (thanks to Carl Hendy for sharing) which mentions that the AI has several limitations. Sometimes it misinterprets things:

We have seen some instances where SGE has appropriately identified information to corroborate its snapshot, but with slight misinterpretations of language that change the meaning of the output

And – like all AIs – it’s not immune to hallucinating:

Like all LLM-based experiences, SGE may sometimes misrepresent facts or inaccurately identify insights

It’s an extremely difficult problem, but Google will need to solve it if it wants users to trust the results of the AI snapshot.

But ultimately, I think if anyone is able to solve these challenges, it’ll be Google. And if they can, I think this can be the most interesting, exciting and positive impact on search results for decades.

Image credit: Andy Holmes